Training my handwriting model: an update

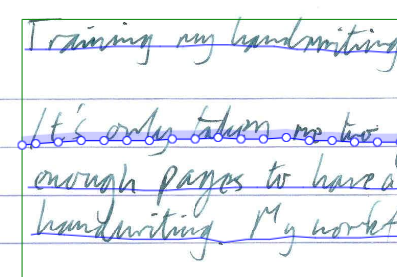

My handwriting!

It’s only taken me two years, but I’ve finally got around to transcribing enough pages to have a first try at training a model on my own handwriting. My workflow has been (roughly) this:

- Write something out longhand with pen and paper

- Scan the pages and upload to Transkribus

- Run layout detection, fix any errors and transcribe the text on my desktop (while listening to some music)

A few useful points I’ve picked up so far:

- My old, inherited multifunction printer has an option to scan directly to FTP, and Transkribus allows upload via FTP, so I’ve set up a scan target that sends documents straight to Transkribus, where I can add them to a collection and process them

- It’s important to transcribe exactly what is on the page, not what you intended to write. My brain tries to autocorrect as I go, so I have to concentrate on suppressing that!

- Where I do notice an error while I’m writing, or change my mind about what I want to say, I try to strike it out clearly on the page and then apply a gap tag when transcribing, as recommended in the docs.

- I’ve played a little with inserting Markdown-style formatting characters when I want to emphasise something, but only when it won’t disrupt my flow; for links I mostly just write `<link here>` as a reminder.
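The printer handles the FTP upload by itself, but the same step is easy to script if you ever want to send a folder of existing scans from a computer instead. Below is a minimal sketch using Python’s standard `ftplib`. The function name `upload_scans`, the `*.pdf` pattern, the `scans` folder and the connection details are all hypothetical placeholders, and it assumes files only need to land in whatever remote directory you start in after logging in, which may not match how Transkribus organises its FTP area.

```python
import ftplib  # needed for the commented usage example at the bottom
from pathlib import Path


def upload_scans(ftp, scan_dir: Path, pattern: str = "*.pdf") -> list[str]:
    """Upload every file in scan_dir matching pattern over an open FTP connection.

    `ftp` is anything with an ftplib.FTP-style storbinary() method.
    Returns the names of the files sent, in sorted order.
    """
    sent = []
    for path in sorted(scan_dir.glob(pattern)):
        with path.open("rb") as fh:
            # STOR saves the file under the same name in the current remote directory
            ftp.storbinary(f"STOR {path.name}", fh)
        sent.append(path.name)
    return sent


# Example usage. The host, username, password and "scans" folder are placeholders
# (assumptions, not real Transkribus details); substitute your own account's FTP settings.
#
# with ftplib.FTP("ftp.example.org") as ftp:
#     ftp.login("you@example.org", "your-password")
#     print(upload_scans(ftp, Path("scans")))
```

Passing the connection in, rather than opening it inside the function, keeps the credentials out of the upload logic and makes it easy to try out against a fake object before pointing it at a real server.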

Now that I’ve got 25 pages, the minimum recommended for training, I’ve run a couple of training jobs using them as ground truth (one from scratch and one using an existing model as a base), and the initial results on the validation set look promising. I wasn’t expecting perfection (my handwriting is too messy for that), but it’s already doing much better than OneNote, which seems to be one of the best options out there for my writing, and better than the recognition on my reMarkable tablet. I just used the default PyLaia settings in Transkribus, so I could probably tune it to do a little better on the current training data, but I’m not sure that would be worth the effort at this point.
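Transkribus summarises how well a model is doing as a character error rate (CER): the number of character insertions, deletions and substitutions needed to turn the model’s output into the ground truth, divided by the number of ground-truth characters. To make that number a bit more concrete, here is a minimal Python sketch of the standard calculation over a validation set of line pairs. It is an illustration rather than Transkribus’s own code; the function names and the two example lines are made up, and real evaluations often normalise whitespace or Unicode first, which this skips.

```python
def edit_distance(ref: str, hyp: str) -> int:
    """Levenshtein distance: fewest insertions, deletions and substitutions between ref and hyp."""
    prev = list(range(len(hyp) + 1))  # distances from the empty prefix of ref
    for i, r in enumerate(ref, start=1):
        cur = [i]
        for j, h in enumerate(hyp, start=1):
            cur.append(min(
                prev[j] + 1,             # ref character missing from the output
                cur[j - 1] + 1,          # extra character in the output
                prev[j - 1] + (r != h),  # substitution (free if the characters match)
            ))
        prev = cur
    return prev[-1]


def character_error_rate(pairs: list[tuple[str, str]]) -> float:
    """CER over (ground truth, model output) line pairs.

    Total edits divided by total ground-truth characters, so longer lines weigh more.
    """
    edits = sum(edit_distance(ref, hyp) for ref, hyp in pairs)
    chars = sum(len(ref) for ref, _ in pairs)
    if chars == 0:
        raise ValueError("ground truth is empty")
    return edits / chars


# Made-up example lines: one dropped "i", one perfect line.
validation = [
    ("My handwriting!", "My handwritng!"),
    ("an update", "an update"),
]
print(f"CER: {character_error_rate(validation):.1%}")  # prints: CER: 4.2%
```

Summing edits and characters across the whole set, rather than averaging per-line rates, stops a short line with one mistake from dominating the score.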

Next I have two things I want to do!

- Use it! Now that I have something working, I can switch from transcribing by hand to transcribing with the model and correcting its errors. That should let me grow my ground truth dataset a bit more quickly while also being useful for digitising my notes (and writing blog posts like this one!)

- Figure out the tools for myself and reproduce this on my own computer. Except when playing games, I rarely use the full power of my desktop, so it would be great to put it to some use in the background while I write emails and reports. That would also mean using a resource I’ve already paid for rather than buying more Transkribus credits, and would give me more flexibility to learn how this all works in depth by running multiple trainings with different parameters. Finally, it would eliminate the need to upload my private notes to the cloud and give me more peace of mind about their privacy.

That’s all for now, but I look forward to posting more updates!

Webmentions

You can respond to this post, "Training my handwriting model: an update", by:

- liking, boosting or replying to a tweet or toot that mentions it; or

- sending a webmention from your own site to https://erambler.co.uk/blog/training-a-handwriting-model-update-1/
